Artificial intelligence against deforestation


Identifying and measuring deforestation and the cultivation of specific commodities at a large scale and in sufficient detail is notoriously difficult.

Identifying the link between specific commodities and areas at risk of deforestation can be extremely complex. At Barry Callebaut, we require our suppliers, such as palm and soy suppliers, to identify the forest areas that need protecting and those that can be developed for agriculture. However, this type of assessment is difficult, time-consuming and often costly.

We needed a solution for our suppliers that was more efficient, less expensive and able to scale. We also knew that artificial intelligence was already being used in climate change work, for example to predict droughts, cloud cover and greenhouse gas emissions.

The question we therefore asked ourselves was: how can we combine existing deforestation methodology with artificial intelligence?

To answer that question, we teamed up with EcoVision Lab, part of the Photogrammetry and Remote Sensing group at ETH Zurich, which develops highly automated artificial intelligence solutions. The group has long-standing experience in combining machine learning (deep learning) with remote sensing to address ecological challenges. The ETH Zurich team uses data from a NASA laser scanner mounted on the International Space Station together with imagery from the European Space Agency (ESA). Applying artificial intelligence to these data makes it possible to map large areas while limiting on-the-ground measurements to the most critical locations.

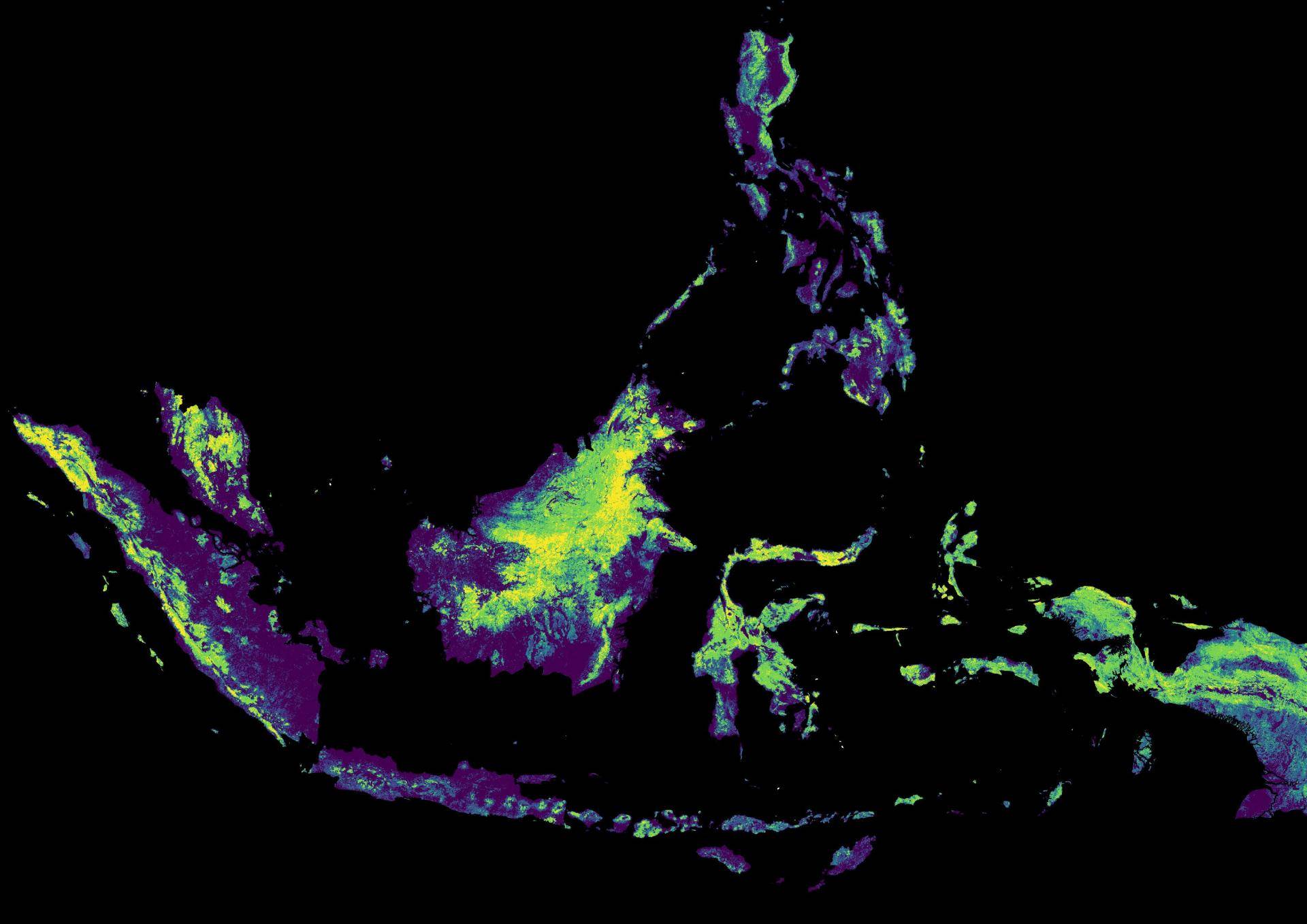

This collaboration led to the development of a publicly available, industry-first, indicative High Carbon Stock (HCS) map that identifies forests with high conservation value and areas where deforestation would cause the highest carbon emissions.

Building on best-in-class approaches

The HCS map strongly supports the current field-based approach, which until now has been the widely used method for measuring the link between commodity cultivation and deforestation: the so-called High Carbon Stock Approach (HCSA).

This new method, which combines deep learning with publicly available satellite imagery, is a true breakthrough: it provides a highly automated, transparent and objective tool that can generate indicative HCS maps at global scale with unprecedented accuracy.

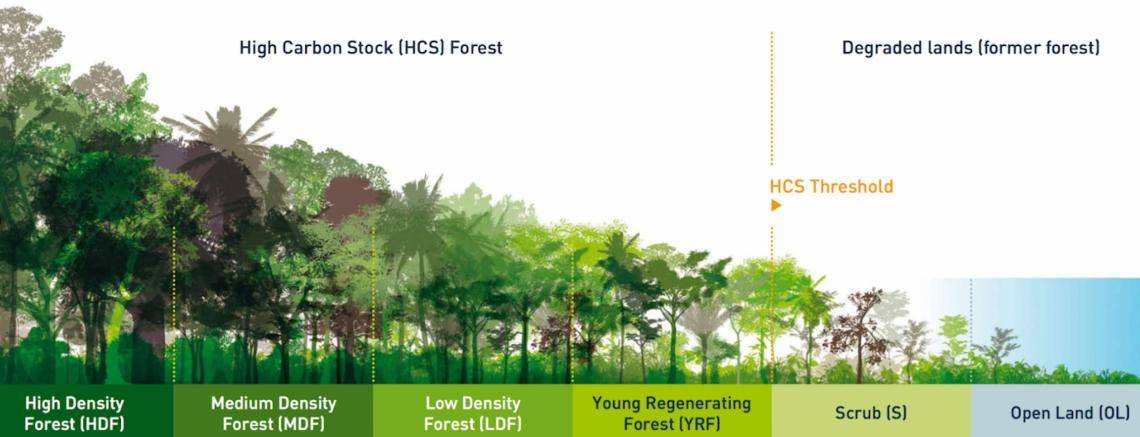

In broad terms, the HCSA classifies the vegetation in an area of land into different carbon stock classes by combining remote-sensing maps with field data. In the field, tree species, size and number are recorded and used to calculate the biomass and carbon content of each carbon stock class. The first four classes are considered potential High Carbon Stock forests.

Source: highcarbonstock.org
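To make the field step more concrete, here is a minimal sketch of how recorded tree measurements might be turned into a plot-level carbon figure, and how the "first four classes" rule can be expressed. The six class names follow the HCSA Toolkit. The biomass formula is an assumption: it uses the pantropical allometric equation of Chave et al. (2014), AGB = 0.0673 × (ρD²H)^0.976, plus an assumed carbon fraction of 0.47. The HCSA may prescribe other equations or regional calibrations, and the function names and plot values are hypothetical.

```python
from dataclasses import dataclass

# HCSA vegetation classes, ordered from densest forest to open land.
HCSA_CLASSES = [
    "High Density Forest",
    "Medium Density Forest",
    "Low Density Forest",
    "Young Regenerating Forest",
    "Scrub",
    "Open Land",
]

# Assumption: carbon makes up 47% of dry biomass (a common IPCC default).
CARBON_FRACTION = 0.47


@dataclass
class Tree:
    diameter_cm: float   # stem diameter at breast height (D), cm
    height_m: float      # total tree height (H), m
    wood_density: float  # species wood density (rho), g/cm^3


def is_potential_hcs_forest(vegetation_class: str) -> bool:
    """The first four HCSA classes are treated as potential HCS forest."""
    return HCSA_CLASSES.index(vegetation_class) < 4


def tree_agb_kg(tree: Tree) -> float:
    """Above-ground biomass in kg, using the Chave et al. (2014) equation (assumed)."""
    return 0.0673 * (tree.wood_density * tree.diameter_cm ** 2 * tree.height_m) ** 0.976


def plot_carbon_t_per_ha(trees: list[Tree], plot_area_ha: float) -> float:
    """Sum tree biomass, convert it to carbon and express it in tonnes of carbon per hectare."""
    total_agb_kg = sum(tree_agb_kg(t) for t in trees)
    return total_agb_kg * CARBON_FRACTION / 1000.0 / plot_area_ha


if __name__ == "__main__":
    # Hypothetical 0.05 ha plot with made-up measurements.
    plot = [Tree(35.0, 25.0, 0.60), Tree(22.0, 18.0, 0.50), Tree(48.0, 30.0, 0.65)]
    print(f"{plot_carbon_t_per_ha(plot, 0.05):.1f} t C/ha")
    print(is_potential_hcs_forest("Young Regenerating Forest"))  # True
```

How plot-level carbon values are then assigned to the classes varies by region and is not shown here.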


HCSA is a widely recognized methodology that is increasingly used by certification standards, such as the Roundtable on Sustainable Palm Oil (RSPO), and by companies committed to breaking the link between deforestation and land development in their operations or supply chains. However, HCSA's reliance on ground surveys and aerial data is a challenge: manually measuring landscapes and evaluating vegetation classes is labor-intensive and difficult to roll out at scale, while flying planes equipped with specialized laser scanners is expensive.

Combining HCSA with the predictive power of artificial intelligence

Implementing artificial intelligence solutions starts with data quality. Deep learning is a fast-moving research field: new algorithms are improving quickly and show the potential to revolutionize forest monitoring and carbon stock estimation from satellite images. However, when relying on supervised learning, that is, learning from large reference datasets, the amount and quality of the data are the key to success. Over the past four years, the ETH team has focused on utilizing the new satellite imagery and calibrating regional carbon biomass data. As a result, we have developed a highly automated, objective tool that can be used to scale up indicative HCS mapping to entire world regions.

Being carbon and forest positive is one of the key commitments of Forever Chocolate. These maps are the result of a four-year collaboration with one of the leading research institutions combining artificial intelligence with remote sensing for ecosystem protection. By partnering, we have created an innovative and scalable solution that everyone can access.

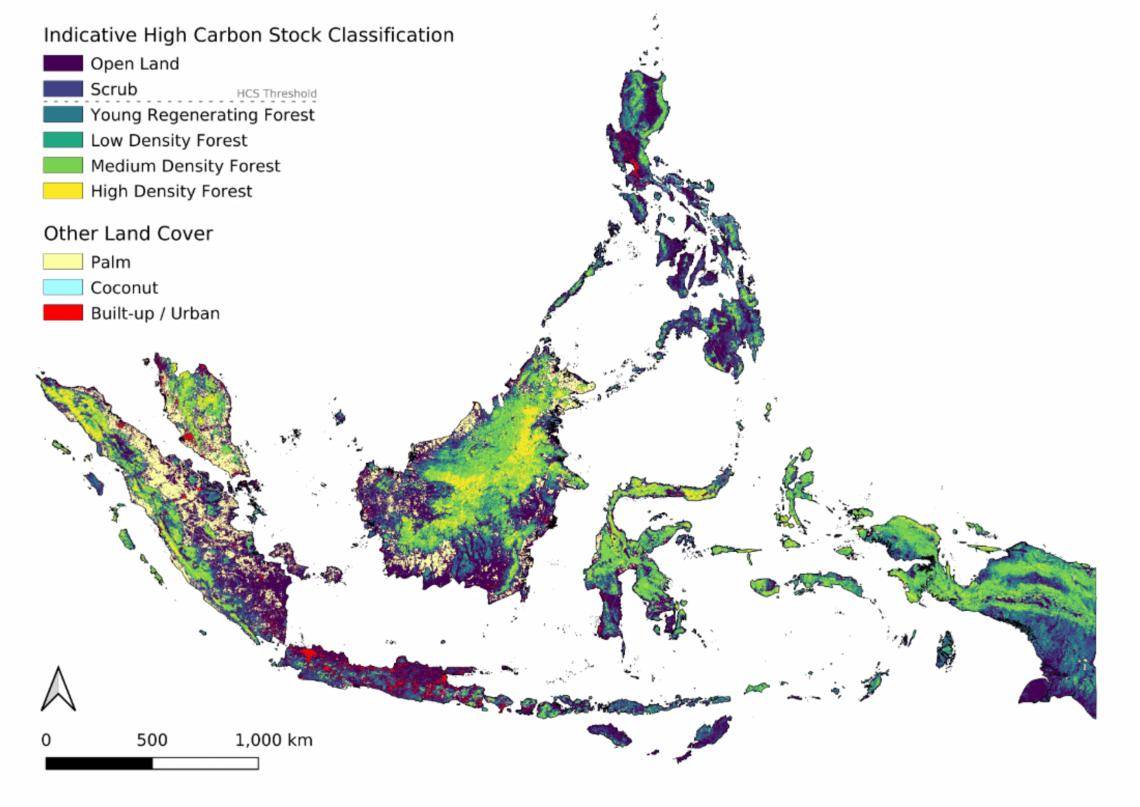

Indicative High Carbon Stock Classification


Our indicative HCS map shows which areas are identified as so-called High Carbon Stock forests. These natural forests store large amounts of carbon in the form of biomass. The map also shows degraded lands (open land and scrub) that store less carbon. Our first high-resolution indicative HCS forest map, covering Indonesia, Malaysia, and the Philippines, is a land-use planning tool. It enables efficient use of available resources to identify forests that should be protected for conservation and degraded lands that may potentially be developed.

Image from Nico Lang, data from EcoVision Lab ETH Zürich. Built-up data: © Copernicus Service Information 2019
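As a planning aid, the map's use can be sketched as a simple tally over a classified raster. Pixels in potential HCS forest classes count towards areas to protect. Scrub and open land count towards areas that might be considered for development after further checks. The class codes, the function name and the 10 m pixel size (the finest resolution of ESA's Sentinel-2 bands, assumed here as the source imagery) are hypothetical. The published map's actual format may differ.

```python
from collections import Counter

# Hypothetical class codes for a classified map.
HCS_FOREST_CODES = {"HDF", "MDF", "LDF", "YRF"}  # potential HCS forest: candidates for protection
DEGRADED_CODES = {"S", "OL"}                     # scrub and open land: may potentially be developed

# Assumption: square 10 m pixels, i.e. 100 m^2 = 0.01 ha each.
PIXEL_AREA_HA = 10 * 10 / 10_000


def planning_summary(class_map: list[list[str]]) -> dict[str, float]:
    """Tally pixel classes and convert counts to hectares; other codes (e.g. built-up) are ignored."""
    counts = Counter(code for row in class_map for code in row)
    forest_px = sum(n for code, n in counts.items() if code in HCS_FOREST_CODES)
    degraded_px = sum(n for code, n in counts.items() if code in DEGRADED_CODES)
    return {
        "hcs_forest_ha": forest_px * PIXEL_AREA_HA,
        "degraded_ha": degraded_px * PIXEL_AREA_HA,
    }


if __name__ == "__main__":
    tile = [
        ["HDF", "HDF", "MDF", "S"],
        ["HDF", "YRF", "OL", "OL"],
        ["LDF", "S", "BU", "OL"],  # "BU": built-up, excluded from both totals
    ]
    print(planning_summary(tile))
```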

We welcome this innovative new HCS indicative map by ETH Zurich and Barry Callebaut. Mapping the HCS forests in tropical forest regions globally is a first step towards protecting them from deforestation. HCSA is keen to build upon this work from Barry Callebaut and ETH to make high-quality HCS maps available for anyone to use in implementing no-deforestation commitments, in particular smallholder farmers.

Southeast Asia and beyond

Today’s launch of this large-scale HCS map marks a pivotal moment in our exciting journey with ETH Zurich, one whose impact could ripple well beyond our own chocolate and cocoa supply chain.

We are passionate about addressing the biggest challenges in our industry, and we can only do so by working together and by consistently moving the needle to find innovative solutions.

Interested in learning more about our large-scale HCS maps?

For more information, please contact:

Oliver von Hagen

Director Global Ingredients Sustainability, Barry Callebaut
